Computing Cluster

A computing cluster is a set of servers connected by a communication network. Each computing node has its own RAM and runs its own operating system. Homogeneous clusters, in which all nodes are identical in architecture and performance, are the most common.

Every cluster has a dedicated server, the control node (frontend). This machine hosts the software that brings up the computing nodes at system startup and manages the launching of programs on the cluster. The users' computing processes themselves run on the computing nodes and are distributed so that each processor runs no more than one computing process.

Users have home directories on an access server, the gateway, which connects the cluster to the outside world through the corporate LAN or the Internet. Users have no direct access to the control node, but they can access the cluster's computing nodes (for example, to control the compilation of a task manually).

A computing cluster, as a rule, runs one of the versions of Unix, a multiuser, multitasking network operating system. In particular, at the IC of NAS of Ukraine the clusters run Linux, a freely distributed variant of Unix. Unix differs in a number of ways from Windows, which usually runs on personal computers; in particular, the differences concern the user interface, process handling, and the file system.

There are several ways to use the computing capacity of a cluster.

Run a set of single-processor tasks. This can be a reasonable option if you need to carry out a set of independent computational experiments with different input data, the run time of each individual calculation is not critical, and all the data fits in the memory available to one process.

Run ready-made parallel programs. For some tasks, free or commercial parallel programs are available that you can use on the cluster if needed. As a rule, it is enough that the program is available in source code and is implemented with the MPI interface in C/C++ or Fortran.

Use routines from parallel libraries. For some areas, e.g., linear algebra, libraries are available that solve a wide range of standard subtasks using parallel processing. If calls to such subtasks account for most of the program's computation, using such a library yields a parallel program with practically no parallel code of your own. An example of such a library is ScaLAPACK, which is available on our clusters.

Write your own parallel programs. This is the most labor-intensive but also the most universal way. There are several variants of this work, in particular inserting parallel constructs into existing programs or writing a parallel program from scratch.

Parallel programs on a computing cluster work in the message-passing model. This means that the program consists of a set of processes, each of which runs on its own processor and has its own address space. Direct access to the memory of another process is impossible, and processes exchange data by sending and receiving messages.

That is, a process that needs to obtain data calls the receive operation and specifies the process from which it expects the data, while a process that needs to pass data to another calls the send operation and specifies the process to which the data should go. This model is implemented by the standard MPI interface.
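The send/receive exchange described above can be imitated without a cluster. The following is a minimal sketch, not MPI itself: it assumes that two threads stand in for processes and that a hypothetical `Mailbox` class stands in for the communication layer. The method names `send` and `recv` and the process numbers 0 and 1 are illustrative assumptions.

```python
import queue
import threading


class Mailbox:
    """Hypothetical stand-in for a message-passing layer (not an MPI API)."""

    def __init__(self, size):
        # One queue per (source, destination) pair, so a receiver can name its sender.
        self._channels = {
            (s, d): queue.Queue() for s in range(size) for d in range(size)
        }

    def send(self, data, source, dest):
        # The sender names the process that should get the data.
        self._channels[(source, dest)].put(data)

    def recv(self, source, dest):
        # The receiver names the process it expects data from and blocks until it arrives.
        return self._channels[(source, dest)].get()


def run_exchange():
    box = Mailbox(size=2)
    results = {}

    def process_0():
        # Process 0 sends its data to process 1, then waits for the reply.
        box.send([1, 2, 3], source=0, dest=1)
        results[0] = box.recv(source=1, dest=0)

    def process_1():
        # Process 1 receives the data from process 0, sums it, and sends the sum back.
        numbers = box.recv(source=0, dest=1)
        box.send(sum(numbers), source=1, dest=0)
        results[1] = numbers

    threads = [threading.Thread(target=process_0), threading.Thread(target=process_1)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


exchange = run_exchange()
print(exchange[0])  # the sum that process 0 received back
```

In this imitation the two workers touch each other's data only through the mailbox, which mirrors the rule that one process cannot read another's memory directly. In a real MPI program the same roles would be played by the library's send and receive calls.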

There are several MPI implementations, free and commercial, both portable ones and ones tuned to a specific communication network. On the SCIT-1 and SCIT-2 clusters we use the commercial implementation Scali (for the SCI interconnect) and the free OpenMPI (for the InfiniBand interconnect).

As a rule, MPI programs follow the SPMD model (single program, multiple data): all processes run the same program code, while different processes hold different data and perform actions that depend on the process number (rank).
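The SPMD idea can be sketched in plain Python, under the assumption that a loop over process numbers stands in for launching the same program on several processors. The helper names `block_range` and `spmd_body` are hypothetical, and the block distribution shown is one common choice rather than something the model prescribes.

```python
def block_range(rank, size, n_items):
    """Return the half-open index range [start, stop) owned by process `rank`."""
    base, extra = divmod(n_items, size)
    # The first `extra` processes take one additional item each.
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return start, stop


def spmd_body(rank, size, data):
    """The single program every process runs; its behaviour depends only on `rank`."""
    start, stop = block_range(rank, size, len(data))
    partial = sum(data[start:stop])  # each process works on its own slice
    role = "collects results" if rank == 0 else "computes only"
    return partial, role


data = list(range(10))
size = 4
# Assumption: this loop imitates four processes started from the same code.
partials = [spmd_body(rank, size, data) for rank in range(size)]
for rank, (partial, role) in enumerate(partials):
    print(rank, partial, role)
```

Every call executes identical code; only the process number decides which slice of the data is handled and whether the process takes on the extra collecting role.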

To speed up parallel programs, measures must be taken to reduce the synchronization overhead of data exchange. Overlapping asynchronous transfers with computation is likely to be a suitable approach. To avoid idle time on individual processors, calculations must be distributed among processes as evenly as possible, and in some cases dynamic load balancing may be necessary.
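To illustrate why even distribution matters, here is a small simulation, offered as a sketch under simplifying assumptions: task costs are known numbers, communication is free, and the function names `static_loads` and `dynamic_loads` are hypothetical. It compares a fixed split into equal-sized blocks with a simple dynamic scheme in which each task goes to whichever worker becomes free first.

```python
import heapq


def static_loads(costs, workers):
    """Split tasks into contiguous blocks of nearly equal count; return total cost per worker."""
    base, extra = divmod(len(costs), workers)
    loads, start = [], 0
    for w in range(workers):
        stop = start + base + (1 if w < extra else 0)
        loads.append(sum(costs[start:stop]))
        start = stop
    return loads


def dynamic_loads(costs, workers):
    """Hand each task, in order, to whichever worker becomes free first."""
    heap = [(0, w) for w in range(workers)]  # (time when free, worker id)
    loads = [0] * workers
    for cost in costs:
        free_at, w = heapq.heappop(heap)
        loads[w] = free_at + cost
        heapq.heappush(heap, (loads[w], w))
    return loads


# Made-up task costs for illustration: two heavy tasks followed by six light ones.
costs = [8, 7, 1, 1, 1, 1, 1, 1]
static = static_loads(costs, 2)
dynamic = dynamic_loads(costs, 2)
print(static, max(static))    # per-worker load and finishing time, static split
print(dynamic, max(dynamic))  # per-worker load and finishing time, dynamic scheme
```

With the static split one worker receives both heavy tasks while the other sits idle for most of the run; the dynamic scheme finishes sooner because the light tasks fill the gaps. Real programs also pay a communication cost for handing out work, which this sketch ignores.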

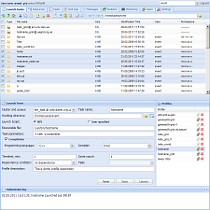

An important indicator of how effectively parallelism is implemented in a program is the load on the computing nodes on which the program runs. If the load on all or some of the nodes is far from 100%, the program is using computing resources inefficiently, i.e. it incurs a large overhead for data exchange or distributes calculations unevenly among processes. Users of SCIT-1 and SCIT-2 can view the load through the web interface for monitoring the state of the nodes.
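As a rough illustration of this indicator, the sketch below computes a load percentage per node and lists the nodes that fall short. It assumes load is measured as computing time divided by elapsed time; the node names, the measurements, and the 90% threshold are made up for the example and do not come from the SCIT web interface.

```python
def load_percent(busy_seconds, wall_seconds):
    """CPU load of one node as a percentage of the elapsed wall-clock time."""
    if wall_seconds <= 0:
        raise ValueError("wall_seconds must be positive")
    return 100.0 * busy_seconds / wall_seconds


def underloaded(busy_by_node, wall_seconds, threshold=90.0):
    """Return the sorted names of nodes whose load is below `threshold` percent."""
    return sorted(
        node
        for node, busy in busy_by_node.items()
        if load_percent(busy, wall_seconds) < threshold
    )


# Made-up measurements for illustration only: seconds each node spent computing
# during a 100-second run.
busy_by_node = {"node01": 98.0, "node02": 97.0, "node03": 55.0, "node04": 40.0}
slow = underloaded(busy_by_node, wall_seconds=100.0)
print(slow)
```

Nodes reported this way are the ones to examine first: they are either waiting on data exchange or were given less work than the others.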

In some cases, to understand the cause of a program's low performance and which parts of the program need to be modified to improve it, it makes sense to use special performance analysis tools: profiling and trace analysis tools.